Learn how the kim tool from Rancher can be used to build functions directly into a K3s cluster

Introduction

The workflow for building an OpenFaaS function involves creating a container image, pushing it into a registry and then deploying it into a cluster. That cluster could be remote, or running on your own PC, but during development this push and pull phase can cause a significant lag for testing changes.

In this blog post we’ll explore how to test out kim, a new project written at Rancher Labs which can build a container image directly into a node’s image library. That means that we can shave off several seconds whenever we want to build a new function and deploy it compared to using faas-cli up with Docker or buildkit.

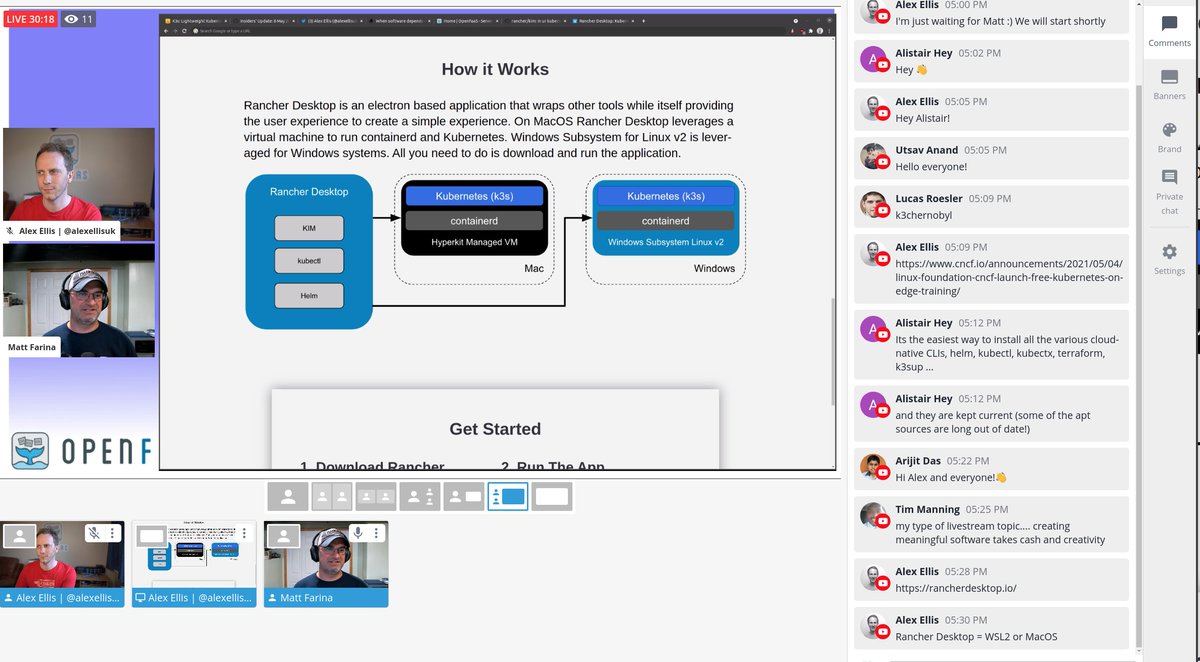

Matt and I are talking about kim and Open Source funding on my YouTube channel

There is some prior art here, and Rancher certainly aren’t the only ones trying to help. The term “inner-loop” is often used with productivity tools to describe the period between thinking of a change, writing the code and having it deployed onto your cluster.

Prior art:

- Okteto - focused on sharing multiple namespaces between teams and providing a live-debug experience.

- skaffold - from Google, it deploys containers whenever you hit save

- tilt - similar to skaffold, tilt provides a DSL for defining how to redeploy your code

- ko - specific to Go, ko is another Google project designed to deploy Go code to a Kubernetes cluster

- Garden - opinionated platform for making local development faster by shortening the “inner-loop”

- Live reloading with docker-compose - an approach by Simon Emms to run the function outside of OpenFaaS and reload code live with docker-compose

You may also be interested in CI/CD within the OpenFaaS docs

kim isn’t revolutionary here, but it does provide a pragmatic tool that speeds up OpenFaaS function development. Feel free to follow along with me, or try it out for yourself the next time you need to build a function.

arkade is an open source marketplace for Kubernetes and has 40+ apps and 40+ CLIs available for download.

We’ll use arkade 0.7.14 or newer to download the various CLIs we need and to install OpenFaaS. You can of course source these yourself if you prefer.

Quickstart

You’ll need a Virtual Machine (VM) since kim currently does not work with K3d out of the box.

All commands need to be run on your client, not on the VM. There is no need to log into the VM.

Create a local VM

You can create a VM on your own computer using Multipass and Ubuntu.

multipass launch \
  --cpus 2 \
  --disk 20G \
  --mem 8G \
  --name k3s-kim

You can use multipass exec k3s-kim bash to add your public SSH key to the .ssh/authorized_keys file. Then find the VM’s IP address using multipass info k3s-kim.

Or create a VM in the cloud

Alternatively, pick your favourite cloud. Linode is a developer cloud and a homepage sponsor for OpenFaaS. You can get 100 USD of free credit to create VMs for K3s.

Once you have your VM, install your SSH key if you haven’t already:

export IP=""

ssh-copy-id root@$IP
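
Every later command depends on IP, so it can help to fail early if the variable was left empty. The following is a minimal sketch only; require_ip is a hypothetical helper name and is not part of k3sup or arkade, and the address in the usage comment is a placeholder.

```shell
#!/usr/bin/env bash

# require_ip is a hypothetical helper: it refuses to continue when the
# IP variable is empty, which is the state left by `export IP=""`.
require_ip() {
  if [ -z "${IP:-}" ]; then
    echo "IP is not set; run: export IP=<address of your VM>" >&2
    return 1
  fi
  echo "Using VM at ${IP}"
}

# Example usage (not run here):
#   export IP="192.168.64.2"   # placeholder address
#   require_ip && ssh-copy-id "root@${IP}"
```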

Install K3s with k3sup

The easiest way to get k3sup is via arkade:

curl -sLS https://get.arkade.dev | sh

sudo mv arkade /usr/local/bin/

Followed by:

arkade get k3sup

Then use k3sup to install K3s:

# Set the public IP here

export IP=""

k3sup install \
  --ip $IP \
  --user root \
  --k3s-channel latest

Once installed you’ll have a KUBECONFIG file you can use:

export KUBECONFIG=`pwd`/kubeconfig

Alternatively, merge the file into your local kubeconfig for permanent use:

export IP=""

k3sup install \
  --ip $IP \
  --user root \
  --k3s-channel latest \
  --merge \
  --local-file $HOME/.kube/config \
  --context k3s-kim

Then download kubectx and set your context:

arkade get kubectx

kubectx k3s-kim

Install OpenFaaS

Install the OpenFaaS CLI:

arkade get faas-cli

Now install OpenFaaS and set the image pull policy for functions to “IfNotPresent”, otherwise the approach kim takes will not work.

arkade install openfaas \
  --set functions.imagePullPolicy=IfNotPresent

Run the commands given to you to start port-forwarding the OpenFaaS gateway and to log in. If you forget the commands just type arkade info openfaas to get them back again.
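
For reference, the post-install steps usually look something like the sketch below. It assumes the default install: the openfaas namespace, a gateway service on port 8080, and a basic-auth secret holding the admin password. The wrapper name openfaas_login is hypothetical, and the output of arkade info openfaas remains the authoritative source for your cluster.

```shell
#!/usr/bin/env bash

# openfaas_login is a hypothetical wrapper around the usual post-install
# steps. It assumes the default "openfaas" namespace and "basic-auth" secret.
openfaas_login() {
  # Wait until the gateway deployment has finished rolling out
  kubectl rollout status -n openfaas deploy/gateway || return 1

  # Forward the gateway to localhost:8080 in the background
  kubectl port-forward -n openfaas svc/gateway 8080:8080 >/dev/null 2>&1 &

  # Give the port-forward a moment to start listening
  sleep 2

  # Read the generated admin password and log in without echoing it
  local password
  password=$(kubectl get secret -n openfaas basic-auth \
    -o jsonpath="{.data.basic-auth-password}" | base64 --decode) || return 1
  printf '%s' "$password" | faas-cli login --username admin --password-stdin
}

# Example usage (not run here):
#   openfaas_login
```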

Deploy kim

kim has a server and client component. The server (also known as an agent) needs to be installed.

Download the client with arkade, then use it to install the server component into your cluster:

arkade get kim

kim builder install

Run kubectl get pods -n kube-image -w and you should see the pod created for the build agent.

Alias docker to kim

The kim CLI is similar to docker, so the easiest way to keep using the workflow we know with faas-cli is to make docker point at kim.

sudo mv /usr/local/bin/docker{,2}

sudo ln -s /usr/local/bin/kim /usr/local/bin/docker

docker --version

kim version v0.1.0-alpha.12 (ac0a8eb3d8801e0e8808b1d6d5303b70c2b3beb0)

In a future version of faas-cli, we may add a flag to faas-cli build such as --kim to switch the command from docker to this new tool. For the time being, this is a temporary workaround.

Later on, you can restore your Docker CLI with:

sudo mv /usr/local/bin/docker{2,}
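
If renaming the real docker binary feels too invasive, one alternative is to place a symlink named docker in a separate directory and put that directory first on your PATH only when you need it. This is a sketch under the assumption that faas-cli resolves docker through PATH; make_docker_shim and the $HOME/.kim-shim directory are hypothetical names chosen for illustration.

```shell
#!/usr/bin/env bash

# make_docker_shim TARGET SHIM_DIR
# Hypothetical helper: creates SHIM_DIR/docker as a symlink to TARGET
# (for example the kim binary) without touching the real docker binary.
make_docker_shim() {
  local target="$1" shim_dir="$2"
  if [ ! -x "$target" ]; then
    echo "not an executable: $target" >&2
    return 1
  fi
  mkdir -p "$shim_dir" || return 1
  ln -sfn "$target" "$shim_dir/docker"
}

# Example usage (not run here); $HOME/.kim-shim is an arbitrary directory:
#   make_docker_shim "$(command -v kim)" "$HOME/.kim-shim"
#   PATH="$HOME/.kim-shim:$PATH" docker --version
```

Removing the shim directory from PATH, or deleting it, brings back the normal Docker CLI.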

Create a function and deploy it

# Fetch the template

faas-cli template store pull node12

# Create a new function

faas-cli new --lang node12 jsbot

# View the generated handler

cat ./jsbot/handler.js

You’ll see the handler as follows:

'use strict'

module.exports = async (event, context) => {
  const result = {
    'body': JSON.stringify(event.body),
    'content-type': event.headers["content-type"]
  }

  return context
    .status(200)
    .succeed(result)
}

Now change the code as follows:

'use strict'

module.exports = async (event, context) => {
  return context.status(200).succeed("OK")
}

Run:

faas-cli up -f jsbot.yml --skip-push

The --skip-push option is required to prevent the image being transferred to a registry and back down again. That’s what we’re trying to avoid.

Invoke the endpoint:

curl -i http://127.0.0.1:8080/function/jsbot

OK

Update the code and run the command again:

'use strict'

module.exports = async (event, context) => {
  return context.status(200).succeed("That was more than just OK!")
}

Build an image with kim and deploy it:

faas-cli up -f jsbot.yml --skip-push

Invoke the endpoint:

curl -i http://127.0.0.1:8080/function/jsbot

That was more than just OK!
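
The edit, deploy and invoke cycle above can be wrapped into one step. The sketch below is only an illustration: redeploy_and_check is a hypothetical helper, and the polling loop assumes that the previous version of the function may keep answering for a moment while the new one rolls out.

```shell
#!/usr/bin/env bash

# redeploy_and_check STACK URL EXPECTED [ATTEMPTS]
# Hypothetical helper: rebuilds and deploys without pushing, then polls
# the function until its response body equals EXPECTED.
redeploy_and_check() {
  local stack="$1" url="$2" expected="$3" attempts="${4:-10}"
  local i=1 body=""

  faas-cli up -f "$stack" --skip-push || return 1

  while [ "$i" -le "$attempts" ]; do
    body=$(curl -s "$url")
    if [ "$body" = "$expected" ]; then
      echo "ok after $i attempt(s)"
      return 0
    fi
    i=$((i + 1))
    sleep 1
  done

  echo "unexpected response: $body" >&2
  return 1
}

# Example usage (not run here):
#   redeploy_and_check jsbot.yml http://127.0.0.1:8080/function/jsbot \
#     "That was more than just OK!"
```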

Wrapping up

In a very short period of time we were able to increase the speed of building OpenFaaS functions for local development. This works with every template and language that you may want to try, and doesn’t need a lot of extra steps or for you to learn new concepts.

The kim project is still nascent, and likely to change and improve over time.

These statements are true at time of writing and may change:

- kim doesn’t work “out of the box” with K3d and requires additional configuration

- I haven’t been able to confirm whether it works with Apple M1 yet. The new Rancher Desktop tool that ships with kim built-in does not work with Apple M1

- The --squash flag is not yet available in kim

- kim also doesn’t work for multi-node setups because it was designed to only accelerate local development

It should also be noted that kim will not work with faasd, however if there is enough demand, we could look at creating a similar tool for the community.

Why don’t you try it out next time you find yourself building, pushing and pulling down images for OpenFaaS or another application?

If you’ve decided that kim is not for you, why don’t you try enabling buildkit instead? It’s a faster way to build functions and works with both OpenFaaS on Kubernetes and K3d. Just prefix your faas-cli up command with DOCKER_BUILDKIT=1, or set it as an environment variable.
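
As a short sketch of the two options, assuming docker on your PATH is the real Docker CLI rather than the kim alias from earlier (up_with_buildkit is a hypothetical wrapper name):

```shell
#!/usr/bin/env bash

# One-off: the variable is set for this single faas-cli invocation only.
# up_with_buildkit is a hypothetical wrapper name.
up_with_buildkit() {
  DOCKER_BUILDKIT=1 faas-cli up -f "$1"
}

# Alternatively, export it once so that every later build in this shell
# session uses BuildKit (not run here):
#   export DOCKER_BUILDKIT=1
#   faas-cli up -f jsbot.yml
```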

You can watch me and Matt Farina from Rancher/SUSE exploring kim live:

Disclaimer: Rancher is a client of OpenFaaS Ltd, however neither this post, K3sup, nor the livestream were sponsored or compensated. Linode is a sponsor of OpenFaaS.com.

Already using OpenFaaS?

Join GitHub Sponsors for 25 USD / mo for access to discounts, offers, and updates on OpenFaaS going back to mid-2019. By taking this small step, you are enabling me to continue to work on OpenFaaS and the other tools we have spoken about today.

Join now: OpenFaaS GitHub Sponsors

Addendum

Jacob Christen from Rancher sent me these two commands on Twitter. I haven’t tested them, so your mileage may vary. They should enable kim with k3d / Docker.

For K3d:

k3d cluster create \
  --volume /var/lib/buildkit \
  --volume /var/lib/rancher \
  --volume /tmp

For K3s within Docker:

docker run -it --name k3s --privileged \
  --tmpfs /run \
  --tmpfs /tmp \
  --volume /var/lib/buildkit \
  --volume /var/lib/rancher \
  rancher/k3s:v1.20.5-k3s1 server --token=k3s